Siemens – Siemens boosting U.S. investments by more than $10 billion for American manufacturing jobs, software and AI infrastructure

- With recent investments, Siemens surpasses $100 billion in total U.S. investment over the past 20 years

- New and expanded factories in Texas and California expected to create over 900 skilled manufacturing jobs

- More than doubling production capacity of electric equipment to power critical American infrastructure such as AI data centers

- Biggest-ever investment in industrial software and AI with planned acquisition of Altair Engineering

Siemens is ramping up investments in the U.S. to support and benefit from America’s industrial tech growth.

“The industrial tech sector is the basis to boost manufacturing in America and there’s no company more prepared than Siemens to make this future a reality for customers from small and medium-sized enterprises to industrial giants,” said Roland Busch, President and CEO of Siemens AG.

Siemens Fort Worth Location USA

The U.S. is already the company’s largest market, and Siemens relies on American talent and American supply chains. The recent investments in the company’s U.S. manufacturing footprint and the planned acquisition of Altair, a Michigan-based software company, together amount to more than $10 billion.

This week, Siemens is unveiling two state-of-the-art manufacturing facilities for electrical products in Fort Worth, Texas, and Pomona, California. The $285 million investment is expected to create over 900 skilled manufacturing jobs. The equipment produced will support critical sectors such as the commercial, industrial and construction markets, while also powering AI data centers across the country to support America’s leadership in the industrial AI revolution. With these facilities, Siemens is more than doubling its production capacity of electric equipment for critical American infrastructure.

Smarter software to help design America’s manufacturing renaissance

In October 2024, Siemens signed an agreement to acquire Altair. Combining Altair with Siemens’ existing software will create the world’s most complete AI-powered design and simulation portfolio. This will allow users in America and around the world to design and manufacture more complex and smarter products faster, by simulating them in the digital world first. For example, engineers could run a virtual crash test on a new car design, or calculate in advance how a cell phone reacts when dropped and optimize the design before building it in the real world. Powerful AI tools help along the way.

“We believe in the innovation and strength of America’s industry. That’s why Siemens has invested over $90 billion in the country in the last 20 years. This year’s investment will bring this number to over $100 billion. We are bringing more jobs, more technology and a boost to America’s AI capabilities,” said Roland Busch.

Siemens employs more than 45,000 people in the U.S. and is partnering with about 12,000 suppliers nationwide.

Wellwater Building, Pomona, USA

Source: Siemens

EMR Analysis

More information on Siemens AG: See full profile on EMR Executive Services

More information on Dr. Roland Busch (President and Chief Executive Officer, Siemens AG): See full profile on EMR Executive Services

More information on Ralf P. Thomas (Member of the Managing Board and Chief Financial Officer, Siemens AG): See full profile on EMR Executive Services

More information on Altair Engineering Inc.: https://altair.com/ + Altair is a global leader in computational intelligence that provides software and cloud solutions in simulation, high-performance computing (HPC), data analytics, and AI. Altair enables organizations across all industries to compete more effectively and drive smarter decisions in an increasingly connected world – all while creating a greener, more sustainable future.

Founded in 1985, Altair Engineering Inc. went public in 2017 (Nasdaq) and is headquartered in Troy, Michigan (USA). Of its more than 3,500 employees, approximately 1,400 work in R&D.

More information on James R. Scapa (Founder, Chairman and Chief Executive Officer, Altair Engineering Inc.): https://investor.altair.com/corporate-governance/management + https://www.linkedin.com/in/james-scapa-56a4b527/

EMR Additional Notes:

- AI – Artificial Intelligence:

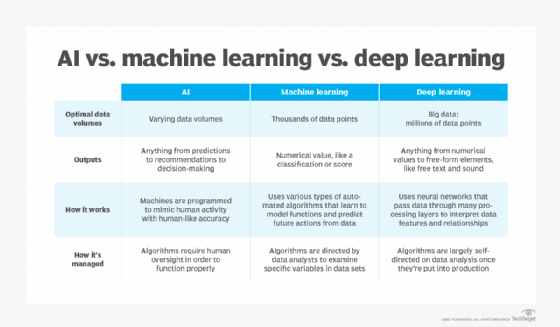

- Artificial intelligence is the simulation of human intelligence processes by machines, especially computer systems.

- As the hype around AI has accelerated, vendors have been scrambling to promote how their products and services use AI. Often what they refer to as AI is simply one component of AI, such as machine learning. AI requires a foundation of specialized hardware and software for writing and training machine learning algorithms. No single programming language is synonymous with AI, but several, including Python, R and Java, are popular.

- In general, AI systems work by ingesting large amounts of labeled training data, analyzing the data for correlations and patterns, and using these patterns to make predictions about future states. In this way, a chatbot that is fed examples of text chats can learn to produce lifelike exchanges with people, or an image recognition tool can learn to identify and describe objects in images by reviewing millions of examples.

- AI programming focuses on three cognitive skills: learning, reasoning and self-correction.
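The learn-then-predict cycle described above (ingest labeled data, find patterns, predict) can be illustrated with a nearest-centroid classifier, one of the simplest ways to learn a pattern from labeled examples. This is a minimal sketch, not how production AI systems are built; the sensor features, values and labels are hypothetical.

```python
from math import dist

def train_centroids(samples):
    """Learn one centroid (average feature vector) per label from labeled data."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Predict the label whose learned centroid is closest to the new input."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Hypothetical labeled training data: (temperature_c, vibration_mm_s) -> machine state
training = [
    ((40.0, 1.0), "normal"),
    ((42.0, 1.2), "normal"),
    ((75.0, 6.5), "faulty"),
    ((80.0, 7.0), "faulty"),
]
model = train_centroids(training)
print(predict(model, (45.0, 1.5)))  # normal
print(predict(model, (78.0, 6.0)))  # faulty
```

The "pattern" learned here is just the average of each class; real systems learn far richer patterns, but the workflow of training on labeled examples and then predicting on new inputs is the same.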

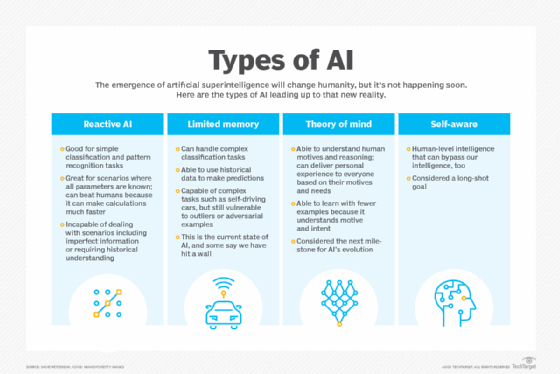

- What are the 4 types of artificial intelligence?

- Type 1: Reactive machines. These AI systems have no memory and are task-specific. An example is Deep Blue, the IBM chess program that beat Garry Kasparov in 1997. Deep Blue could identify pieces on the chessboard and make predictions, but because it had no memory, it could not use past experiences to inform future ones.

- Type 2: Limited memory. These AI systems have memory, so they can use past experiences to inform future decisions. Some of the decision-making functions in self-driving cars are designed this way.

- Type 3: Theory of mind. Theory of mind is a psychology term. When applied to AI, it means that the system would have the social intelligence to understand emotions. This type of AI would be able to infer human intentions and predict behavior, a necessary skill for AI systems to become integral members of human teams.

- Type 4: Self-awareness. In this category, AI systems would have a sense of self, which would give them consciousness. Machines with self-awareness would understand their own current state. This type of AI does not yet exist.
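The practical difference between Type 1 and Type 2 can be sketched in a few lines. Assuming a hypothetical distance sensor and arbitrary thresholds (not taken from any real vehicle), the reactive agent decides from the current reading alone, while the limited-memory agent also consults a short window of past readings:

```python
from collections import deque

class ReactiveAgent:
    """Type 1 sketch: the action depends only on the current observation (no memory)."""
    def act(self, distance_m):
        return "brake" if distance_m < 10 else "cruise"

class LimitedMemoryAgent:
    """Type 2 sketch: keeps a short window of past observations to inform decisions."""
    def __init__(self, window=3):
        self.history = deque(maxlen=window)  # only the most recent observations are kept

    def act(self, distance_m):
        self.history.append(distance_m)
        if distance_m < 10:
            return "brake"
        # Use past readings: if the gap has been shrinking at every step, slow down early.
        readings = list(self.history)
        closing = len(readings) >= 2 and all(b < a for a, b in zip(readings, readings[1:]))
        return "slow" if closing else "cruise"

reactive, memory = ReactiveAgent(), LimitedMemoryAgent()
for d in [30, 22, 15]:
    print(d, reactive.act(d), memory.act(d))
# 30 cruise cruise
# 22 cruise slow
# 15 cruise slow
```

Both agents see the same readings, but only the one with memory notices the trend and reacts before the hard threshold is reached.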

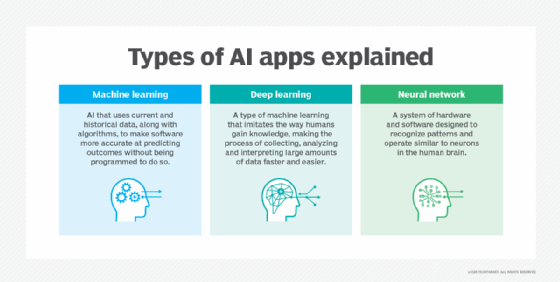

- Machine Learning (ML):

- Developed to mimic human intelligence, ML lets machines learn independently by ingesting vast amounts of data, applying statistical formulas and detecting patterns.

- ML allows software applications to become more accurate at predicting outcomes without being explicitly programmed to do so.

- ML algorithms use historical data as input to predict new output values.

- Recommendation engines are a common use case for ML. Other uses include fraud detection, spam filtering, business process automation (BPA) and predictive maintenance.

- Classical ML is often categorized by how an algorithm learns to become more accurate in its predictions. There are four basic approaches: supervised learning, unsupervised learning, semi-supervised learning and reinforcement learning.
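Supervised learning, the first of these approaches, is the clearest case of "historical data as input to predict new output values". A minimal sketch, assuming a hypothetical predictive-maintenance dataset, fits a straight line by ordinary least squares and then predicts an unseen value:

```python
def fit_line(xs, ys):
    """Supervised learning sketch: fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept

# Hypothetical historical data: machine operating hours -> measured bearing wear (micrometres)
hours = [100, 200, 300, 400]
wear = [12, 19, 31, 38]
model = fit_line(hours, wear)
print(round(predict(model, 500), 1))  # 47.5
```

No rule relating hours to wear is programmed in; the slope (0.09) and intercept (2.5) are learned from the labeled history, which is what "without being explicitly programmed" means in practice.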

- Deep Learning (DL):

- A subset of machine learning, deep learning has enabled much smarter results than were originally possible with classical ML. Face recognition is a good example.

- DL makes use of layers of information processing, each gradually learning more and more complex representations of data. The early layers may learn about colors, the next ones about shapes, the following about combinations of those shapes, and finally actual objects. DL demonstrated a breakthrough in object recognition.

- DL currently underpins the most sophisticated AI systems developed to date.
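The idea of layers building progressively more complex representations can be shown with a two-layer network computing XOR, a function no single threshold unit can represent. In this sketch the weights are set by hand for readability; a real deep learning model would learn them from data (typically by backpropagation with differentiable activations):

```python
def step(z):
    """Threshold activation: fires (1) when the weighted input is positive."""
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """One layer: each unit computes a weighted sum of its inputs plus a bias, then applies step()."""
    return [step(sum(w * x for w, x in zip(unit_w, inputs)) + b)
            for unit_w, b in zip(weights, biases)]

def xor_network(x1, x2):
    # Layer 1 detects simple features: unit 0 = "at least one input on" (OR),
    # unit 1 = "both inputs on" (AND).
    hidden = layer([x1, x2], weights=[[1, 1], [1, 1]], biases=[-0.5, -1.5])
    # Layer 2 combines those features into a more complex concept: OR and not AND (XOR).
    (out,) = layer(hidden, weights=[[1, -1]], biases=[-0.5])
    return out

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
# 0 0 0
# 0 1 1
# 1 0 1
# 1 1 0
```

This mirrors the colors, shapes, objects progression in miniature: the first layer extracts simple features and the second composes them into something neither feature expresses alone.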

- Computer Vision (CV):

- Computer vision is a field of artificial intelligence that enables computers and systems to derive meaningful information from digital images, videos and other visual inputs — and take actions or make recommendations based on that information.

- The best-known example today is Google Translate, which can take an image of text, from menus to signboards, and convert it into text that the program then translates into the user’s native language.
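Deriving information from pixels usually starts with low-level operations such as edge detection. The sketch below is a generic classical CV technique, not a description of how Google Translate works: it applies a 3x3 Sobel kernel to a tiny hypothetical grayscale image to find where brightness changes sharply from left to right. The threshold of 100 is an arbitrary assumption.

```python
def detect_vertical_edges(image, threshold=100):
    """Mark pixels where brightness changes sharply from left to right (vertical edges).

    Uses the 3x3 Sobel kernel for the x-direction gradient; border pixels are left as 0.
    """
    kernel = [[-1, 0, 1],
              [-2, 0, 2],
              [-1, 0, 1]]
    rows, cols = len(image), len(image[0])
    edges = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = sum(kernel[i][j] * image[r + i - 1][c + j - 1]
                     for i in range(3) for j in range(3))
            edges[r][c] = 1 if abs(gx) >= threshold else 0
    return edges

# Hypothetical 5x5 image: dark left side (0), bright right side (200).
image = [[0, 0, 200, 200, 200] for _ in range(5)]
for row in detect_vertical_edges(image):
    print(row)
# [0, 0, 0, 0, 0]
# [0, 1, 1, 0, 0]
# [0, 1, 1, 0, 0]
# [0, 1, 1, 0, 0]
# [0, 0, 0, 0, 0]
```

Modern CV systems typically learn such filters with deep learning rather than hand-coding them, but the principle of turning raw pixels into features is the same.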

- Machine Vision (MV):

- Machine Vision is the ability of a computer to see; it employs one or more video cameras, analog-to-digital conversion and digital signal processing. The resulting data goes to a computer or robot controller. Machine Vision is comparable in complexity to voice recognition.

- MV uses the latest AI technologies to give industrial equipment the ability to see and analyze tasks in smart manufacturing, quality control, and worker safety.

- Computer Vision systems can gain valuable information from images, videos, and other visuals, whereas Machine Vision systems rely on the image captured by the system’s camera. Another difference is that Computer Vision systems are commonly used to extract and use as much data as possible about an object.
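In a quality-control setting, a machine vision system typically reduces the camera frame to a decision a controller can act on. The following is a minimal pass/fail sketch; the function name, the pixel thresholds and the sample frames are all hypothetical, and production systems increasingly replace such hand-set rules with trained models:

```python
def inspect_part(image, dark_threshold=50, max_defect_pixels=3):
    """Pass/fail check on a grayscale camera frame of a bright part.

    Counts pixels darker than dark_threshold (treated as defects such as scratches or holes)
    and rejects the part if there are more than max_defect_pixels.
    """
    defects = sum(1 for row in image for pixel in row if pixel < dark_threshold)
    return ("reject" if defects > max_defect_pixels else "pass"), defects

good_part = [[220, 218, 225], [219, 221, 224], [223, 220, 222]]
scratched = [[220, 30, 225], [219, 25, 224], [223, 20, 40]]
print(inspect_part(good_part))  # ('pass', 0)
print(inspect_part(scratched))  # ('reject', 4)
```

The returned verdict is the kind of signal that would be passed to a robot controller, for example to divert a rejected part off the line.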

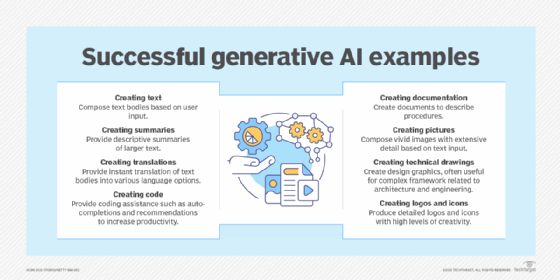

- Generative AI (GenAI):

- Generative AI technology generates outputs based on some kind of input, often a prompt supplied by a person. Some GenAI tools work in a single medium, for example turning text inputs into text outputs. With the public release of ChatGPT in late November 2022, the world at large was introduced to an AI app capable of creating text that sounded more authentic and less artificial than any previous generation of computer-crafted text.
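The prompt-in, text-out pattern can be illustrated with a word-level bigram Markov chain, a far simpler generative model than the neural networks behind ChatGPT. This sketch only shows the principle of learning which word tends to follow which from example text and then generating a continuation of a one-word prompt; the training corpus is made up for illustration.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record which word follows which in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, prompt, length=8, seed=0):
    """Generate text from a one-word prompt by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    output = [prompt]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:  # no known continuation: stop early
            break
        output.append(rng.choice(options))
    return " ".join(output)

corpus = ("the factory builds the equipment and the equipment powers the data center "
          "and the data center runs the software")
model = train_bigrams(corpus)
print(generate(model, "the"))
```

Each generated word pair occurs somewhere in the training text, yet the full sentence usually does not; producing new combinations from learned statistics is the core idea that modern GenAI scales up enormously.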

- Edge AI Technology:

- Edge artificial intelligence refers to the deployment of AI algorithms and AI models directly on local edge devices such as sensors or Internet of Things (IoT) devices, which enables real-time data processing and analysis without constant reliance on cloud infrastructure.

- Simply stated, edge AI, or “AI on the edge”, refers to the combination of edge computing and artificial intelligence to execute machine learning tasks directly on interconnected edge devices. Edge computing allows for data to be stored close to the device location, and AI algorithms enable the data to be processed right on the network edge, with or without an internet connection. This facilitates the processing of data within milliseconds, providing real-time feedback.

- Self-driving cars, wearable devices, security cameras, and smart home appliances are among the technologies that leverage edge AI capabilities to deliver real-time information to users promptly, when it is most essential.
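The benefit of processing on the device can be sketched with a lightweight anomaly detector that keeps only a small rolling buffer in local memory and decides on each reading immediately, so only alerts (not the raw data stream) would need to leave the device. The class name, window size and z-score limit are hypothetical, and the simple statistical rule stands in for a trained ML model:

```python
from collections import deque
from statistics import mean, pstdev

class EdgeAnomalyDetector:
    """Runs on the device: flags readings far from the recent average without calling the cloud."""

    def __init__(self, window=5, z_limit=3.0):
        self.recent = deque(maxlen=window)  # small rolling buffer kept in local memory
        self.z_limit = z_limit

    def process(self, reading):
        """Return True if the reading is anomalous compared with the recent window."""
        anomalous = False
        if len(self.recent) == self.recent.maxlen:
            mu, sigma = mean(self.recent), pstdev(self.recent)
            anomalous = sigma > 0 and abs(reading - mu) / sigma > self.z_limit
        if not anomalous:
            self.recent.append(reading)  # only normal readings update the baseline
        return anomalous

detector = EdgeAnomalyDetector()
readings = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0]
alerts = [r for r in readings if detector.process(r)]
print(alerts)  # [35.0]
```

Because the decision is made locally, the latency is bounded by the device's own compute rather than a network round trip, and the detector keeps working if connectivity drops.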

- Multimodal Intelligence and Agents:

- Multimodal AI is a subset of artificial intelligence that integrates information from various modalities, such as text, images, audio, and video, to build more accurate and comprehensive AI models.

- Multimodal capabilities allow AI systems to interact with users in a more natural and intuitive way. Such a system can see, hear and speak, which means that users can provide input and receive responses in a variety of ways.

- An AI agent is a computational entity designed to act independently. It performs specific tasks autonomously by making decisions based on its environment, its inputs and a predefined goal. What separates an AI agent from an AI model is the ability to act. There are many different kinds of agents, such as reactive agents and proactive agents, and agents can operate in fixed or dynamic environments. More sophisticated applications include agents that handle data in various formats, known as multimodal agents, and systems that deploy multiple agents to tackle complex problems.
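The observe, decide, act loop that distinguishes an agent from a model can be sketched with a simple reactive, goal-driven thermostat agent. The room simulation and its warming and cooling rates are hypothetical; the point is that the agent repeatedly chooses an action that changes its environment in pursuit of a predefined goal:

```python
def simulate_room(temperature, heater_on):
    """Toy environment: warms 1.0 degree per step with the heater on, cools 0.5 degrees with it off."""
    return temperature + (1.0 if heater_on else -0.5)

class ThermostatAgent:
    """A simple reactive agent with a predefined goal (the target temperature)."""

    def __init__(self, target):
        self.target = target

    def decide(self, temperature):
        return temperature < self.target  # True -> switch the heater on

def run(agent, start, steps):
    temperature, log = start, []
    for _ in range(steps):
        heater_on = agent.decide(temperature)                 # decide from the observed state
        temperature = simulate_room(temperature, heater_on)   # the action changes the environment
        log.append((heater_on, temperature))
    return log

for heater_on, temp in run(ThermostatAgent(target=21.0), start=18.0, steps=6):
    print(f"heater {'on ' if heater_on else 'off'} -> {temp:.1f} C")
```

A model would stop at predicting the next temperature; the agent closes the loop by acting on that environment until the goal is met, then holds it around the target.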

- Small Language Models (SLM) and Large Language Models (LLM):

- Small language models (SLMs) are artificial intelligence (AI) models capable of processing, understanding and generating natural language content. As their name implies, SLMs are smaller in scale and scope than large language models (LLMs).

- An LLM (large language model) is a type of machine learning/deep learning model that can perform a variety of natural language processing (NLP) and analysis tasks, including translating, classifying, and generating text; answering questions in a conversational manner; and identifying data patterns.

- For example, virtual assistants like Siri, Alexa, or Google Assistant increasingly use LLMs to process natural language queries and provide useful information or execute tasks such as setting reminders or controlling smart home devices.
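One concrete consequence of the SLM/LLM size difference is memory: just storing a model's weights takes roughly (number of parameters) × (bits per parameter) / 8 bytes. The back-of-the-envelope sketch below uses hypothetical 3-billion and 70-billion parameter sizes for illustration; it ignores activations, the key-value cache and runtime overhead, so real usage is higher.

```python
def weight_memory_gb(num_parameters, bits_per_parameter=16):
    """Approximate memory needed just to hold a model's weights, in gigabytes (10**9 bytes)."""
    return num_parameters * bits_per_parameter / 8 / 1e9

# Hypothetical model sizes chosen for illustration.
for name, params in [("small model, 3B parameters", 3e9), ("large model, 70B parameters", 70e9)]:
    print(f"{name}: {weight_memory_gb(params):.0f} GB at 16-bit, "
          f"{weight_memory_gb(params, 4):.1f} GB at 4-bit")
# small model, 3B parameters: 6 GB at 16-bit, 1.5 GB at 4-bit
# large model, 70B parameters: 140 GB at 16-bit, 35.0 GB at 4-bit
```

A few gigabytes can fit on a laptop or phone, while over a hundred gigabytes generally calls for data-center accelerators, which is one reason SLMs are attractive for the edge AI scenarios described above.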